This post is, in a way, one of a series, starting with .NET 5, and following with .NET 6. In each one I discuss how to squeeze the maximum performance out of .NET UDP sockets, and look at the changes made by the .NET team to advance UDP performance in each of the releases.

At Enclave, we create encrypted tunnels between systems to move Layer2/3 ethernet frames, and we prefer to do this over UDP. This means we spend a lot of time thinking about our UDP performance, because the speed at which we can process UDP packets directly correlates with our throughput at the virtual network level.

Unreliable UDP datagrams are the preferred transport for tunnelled traffic because it’s highly likely that tunnels will themselves carry encapsulated TCP traffic. Carrying encapsulated TCP traffic inside a TCP connection can lead to a mismatch of retransmission timers between the upper and lower TCP layers, creating a situation where the upper layer queues up more retransmissions than the lower layer can process, which adversely impacts the throughput of the link. For a more in-depth explanation of the TCP over TCP problem, see Why TCP Over TCP Is A Bad Idea by Olaf Titz.

I’m particularly excited about .NET 8 being released, specifically because of this PR in the dotnet runtime, which addresses my last remaining allocation-frustration with .NET UDP sockets, which I mentioned in my .NET6 post, and means we can now actually achieve zero-allocation UDP receives! 🥳🥳

All the code used here for benchmarking UDP sockets can be found on GitHub; I’ve got one label for each dotnet version, so you can go back through the changes.

Fundamentals

Before I get to the good stuff, I’ll take a moment here to review our goals, and the fundamentals of high-performance UDP sockets. You can safely skip ahead here if you’ve already read one of the previous posts in the series.

We have three core goals when it comes to UDP sockets:

- Achieve as close to line speed as possible when sending and receiving.

- Try to use processor time efficiently and not waste cycles.

- Sustain large numbers of concurrent UDP sockets.

How do we achieve these? There are a few core considerations that help get us there:

- Use asynchronous IO; it’s pretty well-known now that to achieve a significant amount of concurrent IO in .NET, you need to use asynchronous IO. This ensures we aren’t using a single thread per-socket, and reduces thread-pool consumption. The .NET runtime basically takes care of using async UDP sockets correctly for us (or at least it has since .NET6).

- Don’t copy data; we use a zero-copy pipeline all the way from UDP receive to surfacing a decrypted network packet at the virtual network adapter. We wield Span<byte> and ReadOnlySpan<byte> quite a lot to make that happen.

- Reduce allocations; when you’re trying to squeeze every last bit of CPU time, it’s worth trying to avoid allocating objects and memory from the heap where possible. This avoids both:

  - The time it takes to actually allocate objects; the GC is fast, but allocating a new object from Gen0 still takes some time.

  - GC pressure. The more you allocate, the more the GC has to clean up; again, this process is fast, but still consumes CPU time.

That last one is where .NET8 really shines with UDP sockets, and I’ll dive into that in more detail in a bit.
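To make the “don’t copy data” point from the list above a bit more concrete, here’s a minimal sketch of slicing a datagram with spans. The packet layout (a 4-byte big-endian sequence number followed by the payload) is made up purely for illustration; it isn’t our wire format.

```csharp
using System;
using System.Buffers.Binary;

// Hypothetical layout for illustration only: a 4-byte big-endian
// sequence number followed by the payload bytes.
byte[] datagram = { 0x00, 0x00, 0x00, 0x2A, 0xDE, 0xAD, 0xBE, 0xEF };

ReadOnlySpan<byte> packet = datagram;

// Reading the header parses the bytes in place.
uint sequence = BinaryPrimitives.ReadUInt32BigEndian(packet);

// Slicing creates a view over the same memory; no bytes are copied.
ReadOnlySpan<byte> payload = packet.Slice(4);

Console.WriteLine($"seq={sequence}, payload length={payload.Length}");

// A change to the underlying array is visible through the span,
// which shows the span is a view rather than a copy.
datagram[4] = 0xFF;
Console.WriteLine(payload[0] == 0xFF); // True
```

Because a span is just a pointer and a length, passing slices down a pipeline costs nothing on the heap.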

General improvements from .NET8

With every release of .NET, I tend to see across-the-board performance improvements just by building against the new version, and that remains true going from 7 to 8.

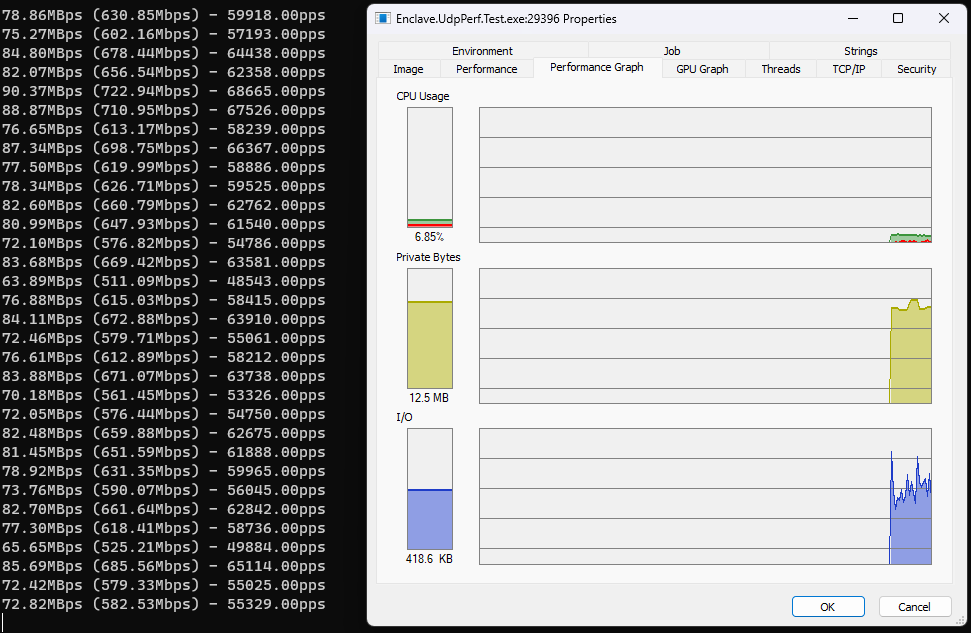

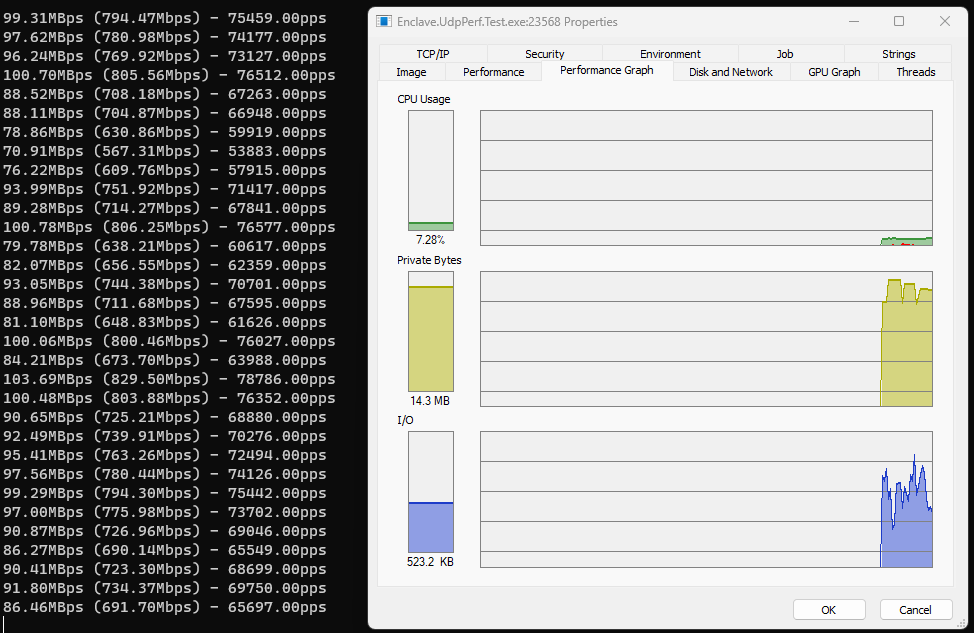

First, let’s baseline the performance of .NET 7 UDP sockets, using a simple test application (available here). I’ve already applied as many of the approaches listed above as I can, so let’s see what we get.

In all these tests, I’m sending individual UDP packets with a data payload of 1380 bytes, which reflects a common ethernet packet size, particularly for UDP packets that contain encrypted ethernet frames. I’m running these tests on a 6-core (12-thread) Intel i7-9750H@2.60GHz, over a 1 Gigabit Ethernet Link. I’m only sending/receiving 1 packet at a time, rather than sending with all the available cores.

Here’s sending:

…and receiving:

So we’re already doing pretty well, quite frankly. We’re able to send ~60k pps (packets-per-second) through our UDP socket implementation, and receiving we’re able to pull ~75k pps off the wire.

The discrepancy in throughput here (why can I receive more than I can send??) is because both these tests are from the same machine, but with the roles reversed, rather than being the same test’s send/receive perspective.
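For a sense of scale, packets-per-second can be converted into link utilisation with some quick arithmetic. The sketch below does that for the two figures above; the 66 bytes of per-packet overhead is my own assumption (IPv4, no VLAN tag, standard Ethernet framing), so treat the on-the-wire number as an estimate.

```csharp
using System;

const int PayloadBytes = 1380; // payload size used in these tests

// Assumed per-packet overhead for IPv4/UDP over untagged Ethernet:
// UDP header 8 + IPv4 header 20 + Ethernet header 14 + FCS 4
// + preamble/SFD 8 + inter-frame gap 12 = 66 bytes.
const int AssumedOverheadBytes = 8 + 20 + 14 + 4 + 8 + 12;

// Megabits per second of application payload.
double PayloadMbps(int pps) => pps * (double)PayloadBytes * 8 / 1_000_000;

// Estimated megabits per second actually occupying the link.
double OnWireMbps(int pps) => pps * (double)(PayloadBytes + AssumedOverheadBytes) * 8 / 1_000_000;

foreach (int pps in new[] { 60_000, 75_000 })
{
    Console.WriteLine($"{pps} pps = {PayloadMbps(pps):F1} Mbps payload, ~{OnWireMbps(pps):F1} Mbps on the wire");
}
```

At 1380 bytes per packet, ~60k pps works out to roughly 662 Mbps of payload and ~75k pps to roughly 828 Mbps, so a single socket is already filling a good chunk of the 1 Gigabit link.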

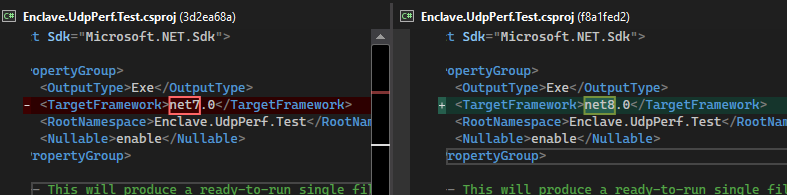

So this is already no slouch. Let’s see what happens just moving to .NET 8; literally this:

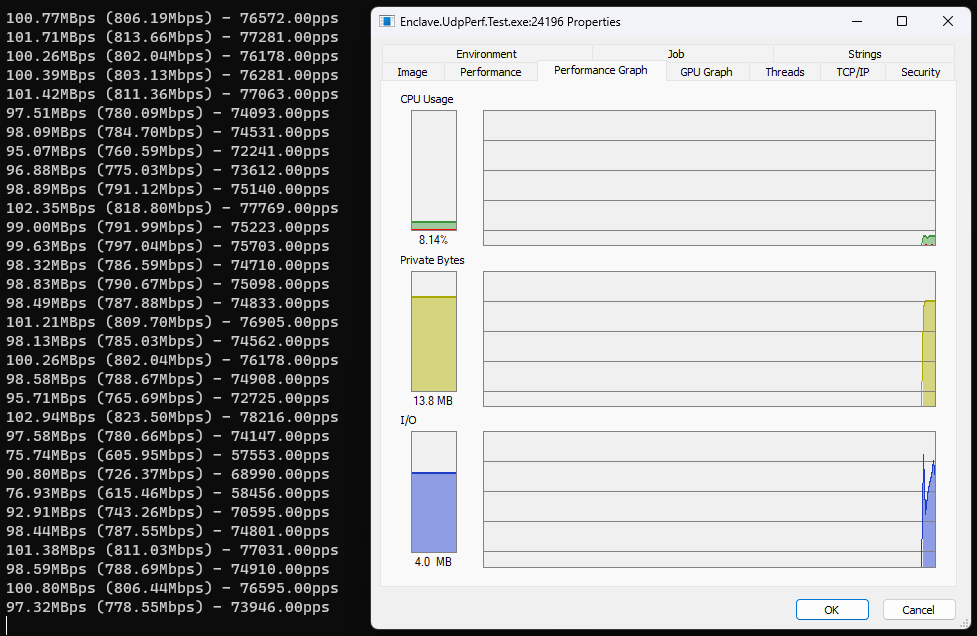

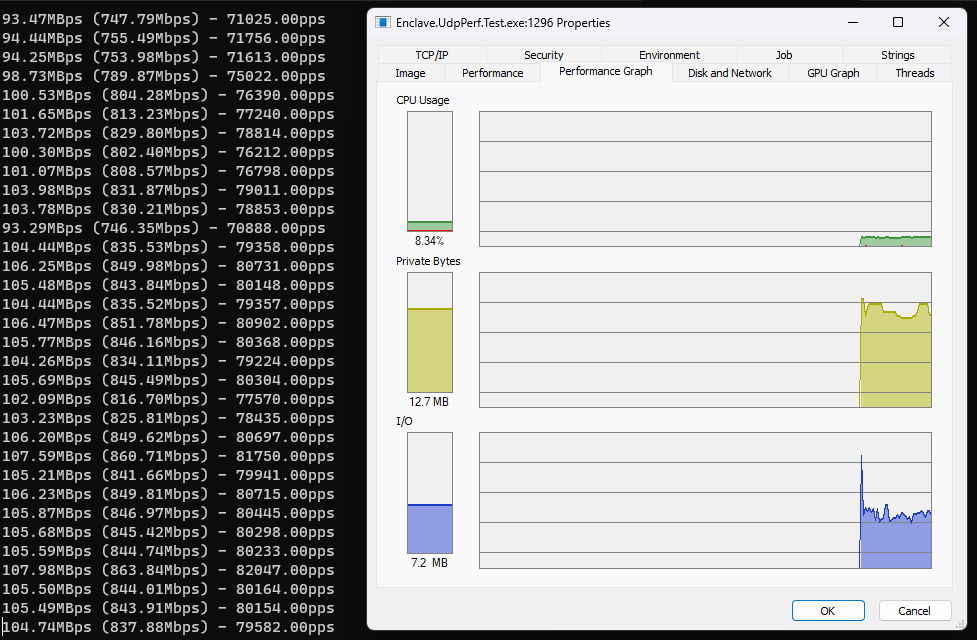

Same code, different .NET version, here’s the sending:

…and the receiving:

So even with no further code changes, we’re up to ~67k pps on the sending side (a gain of ~7k pps), and ~78k pps on the receiving side (a gain of ~3k pps). Not bad for a 1-character change to a project file.

Receive Allocations

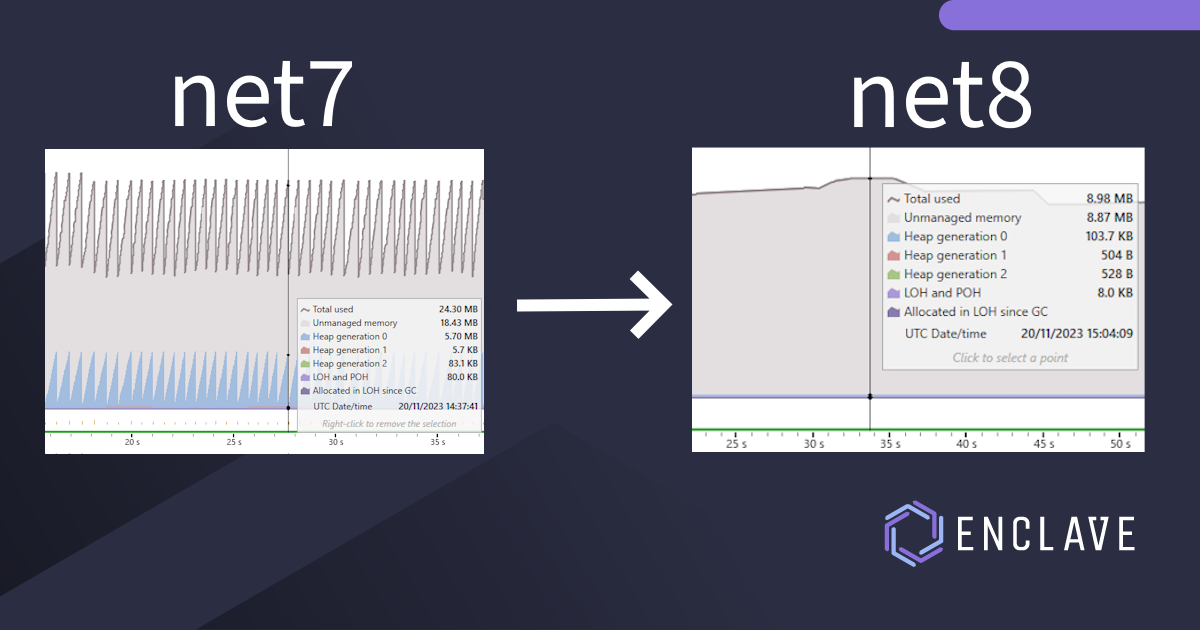

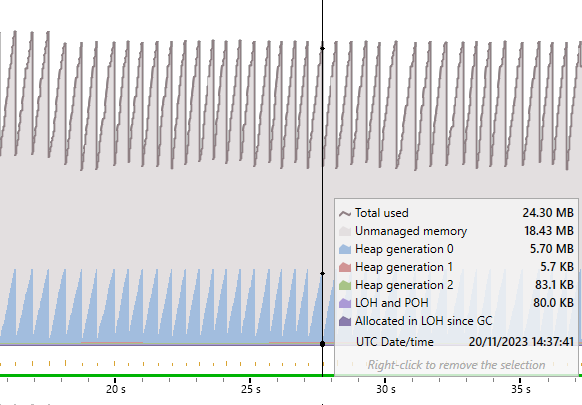

But there’s still a problem with the UDP receive path, and that is to do with the overload of Socket.ReceiveFromAsync I’m using. Let’s look at the memory allocation graph from the .NET8 UDP receive test:

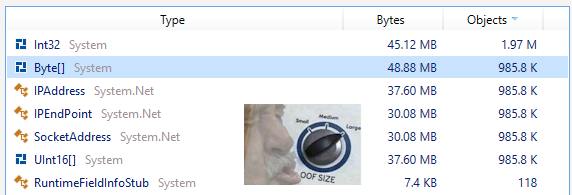

Check out that sawtooth! What’s going on here? Let’s take a look at the objects actually allocated during that time:

Ouch! A total of ~6.8M objects allocated! What’s causing that? Let’s look at the overload we’re using to receive data:

```csharp
public ValueTask<SocketReceiveFromResult> ReceiveFromAsync(
    Memory<byte> buffer,
    EndPoint remoteEndPoint,
    CancellationToken cancellationToken = default)
```

The returned SocketReceiveFromResult is pretty straightforward, and contains:

```csharp
public struct SocketReceiveFromResult
{
    public int ReceivedBytes;
    public EndPoint RemoteEndPoint;
}
```

That RemoteEndPoint field is the real culprit here; populating it is the cause of a lot of our pain. Every time we call ReceiveFromAsync, an IPEndPoint must be created to populate that value, which in turn requires an IPAddress, and a number of data copies/boxing to place the OS SocketAddress data into the right format to create that.

Never mind the fact that each ReceiveFromAsync operation also needs its own SocketAddress instance.
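A quick way to see this for yourself is to receive two packets from the same sender and compare the endpoints that come back. This is a small loopback sketch rather than anything from the test app; the socket setup and the `SendAndReceiveAsync` helper are just scaffolding I’ve made up for the example.

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Threading.Tasks;

using var receiver = new Socket(AddressFamily.InterNetwork, SocketType.Dgram, ProtocolType.Udp);
receiver.Bind(new IPEndPoint(IPAddress.Loopback, 0));

using var sender = new Socket(AddressFamily.InterNetwork, SocketType.Dgram, ProtocolType.Udp);
sender.Bind(new IPEndPoint(IPAddress.Loopback, 0));

byte[] buffer = new byte[2048];
ReadOnlyMemory<byte> payload = new byte[] { 1, 2, 3 };
var anySource = new IPEndPoint(IPAddress.Any, 0);

// Hypothetical helper: send one datagram over loopback, then receive it
// with the EndPoint-returning overload discussed above.
async Task<SocketReceiveFromResult> SendAndReceiveAsync()
{
    await sender.SendToAsync(payload, SocketFlags.None, receiver.LocalEndPoint!);
    return await receiver.ReceiveFromAsync(buffer.AsMemory(), anySource);
}

SocketReceiveFromResult first = await SendAndReceiveAsync();
SocketReceiveFromResult second = await SendAndReceiveAsync();

// Both packets came from the same peer, so the values should match...
Console.WriteLine($"Same value:    {first.RemoteEndPoint.Equals(second.RemoteEndPoint)}");

// ...but if each receive built its own IPEndPoint, they are different objects.
Console.WriteLine($"Same instance: {ReferenceEquals(first.RemoteEndPoint, second.RemoteEndPoint)}");
```

If the second line reports different instances, every packet is paying for at least one endpoint object, even though the peer never changed.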

The dotnet team has known about this problem for a little while, and excitingly, in .NET8 it was made possible to fix with this PR.

.NET8 introduced new overloads to the Socket class that let you provide a reusable SocketAddress object to be used directly by the Socket class and filled with OS socket address information when the method returns:

```csharp
ValueTask<int> ReceiveFromAsync(
    Memory<byte> buffer,
    SocketFlags socketFlags,
    SocketAddress receivedAddress,
    CancellationToken cancellationToken = default)
```

The docs for receivedAddress say that “it will be updated with the value of the remote peer”.

SocketAddress boils down to two fundamental properties:

```csharp
public AddressFamily Family { get; }
public Memory<byte> Buffer { get; }
```

The Family determines the size of the buffer, and the Buffer contains the raw OS socket address information after the receive has completed.
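If you want to poke at a SocketAddress without a live socket, serialising an IPEndPoint gives you a populated one. This is only a sketch for exploration; the loopback address and port 5000 are arbitrary, and the raw bytes are the OS’s native socket address layout, so I’d treat the hex dump as opaque and platform-specific.

```csharp
using System;
using System.Net;
using System.Net.Sockets;

// Serialising an endpoint produces a SocketAddress holding the raw
// OS socket address for 127.0.0.1:5000 (values chosen arbitrarily).
SocketAddress address = new IPEndPoint(IPAddress.Loopback, 5000).Serialize();

Console.WriteLine($"Family: {address.Family}");
Console.WriteLine($"Size:   {address.Size}");

// Dump only the bytes that are in use; the layout is platform-specific.
Console.WriteLine($"Raw:    {Convert.ToHexString(address.Buffer.Span.Slice(0, address.Size))}");

// IPEndPoint.Create turns the raw address back into an endpoint.
EndPoint roundTripped = new IPEndPoint(IPAddress.Any, 0).Create(address);
Console.WriteLine(roundTripped);
```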

With these new overloads, we allocate our own SocketAddress instance, that can be reused every time we receive. The method then only has to return the number of bytes received. From the test app:

```csharp
private static async Task DoReceiveAsync(Socket udpSocket, ThroughputCounter throughput, CancellationToken cancelToken)
{
    byte[] buffer = GC.AllocateArray<byte>(length: 65527, pinned: true);
    Memory<byte> bufferMem = buffer.AsMemory();

    // Allocate a `SocketAddress`.
    var receivedAddress = new SocketAddress(udpSocket.AddressFamily);

    while (!cancelToken.IsCancellationRequested)
    {
        try
        {
            // Provide our reusable SocketAddress.
            // receivedAddress will be populated when the method returns.
            var receivedBytes = await udpSocket.ReceiveFromAsync(
                bufferMem,
                SocketFlags.None,
                receivedAddress);

            throughput.Add(receivedBytes);

            // More on this in a moment...
            var endpoint = GetEndPoint(receivedAddress);
        }
        catch (SocketException)
        {
            break;
        }
    }
}
```

Getting the EndPoint

To some extent, just taking away SocketReceiveFromResult so we don’t have to create an IPEndPoint and the associated data on every packet, then benchmarking that, is sort of cheating, because we’re doing less work, and not getting the IP address and port information we’d normally need.

So, to get like-for-like we need to also get the endpoint information, but with this reusable SocketAddress. The nice thing is, we can now cache a created EndPoint, using the contents of SocketAddress as a key, so we don’t need to allocate every time.

Conveniently, the built-in implementation of SocketAddress.Equals compares the contents of the Buffer property, so we can use that as a key in our dictionary without additional code.
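Here’s a small sketch of that behaviour: two distinct SocketAddress instances describing the same peer compare equal and find the same dictionary entry. The addresses, ports, and the "peer A" value are arbitrary placeholders.

```csharp
using System;
using System.Collections.Generic;
using System.Net;

// Two separate instances describing the same peer, plus a different peer.
SocketAddress first = new IPEndPoint(IPAddress.Loopback, 5000).Serialize();
SocketAddress second = new IPEndPoint(IPAddress.Loopback, 5000).Serialize();
SocketAddress other = new IPEndPoint(IPAddress.Loopback, 5001).Serialize();

var lookup = new Dictionary<SocketAddress, string> { [first] = "peer A" };

Console.WriteLine(ReferenceEquals(first, second)); // False: different objects
Console.WriteLine(first.Equals(second));           // True: same contents
Console.WriteLine(lookup.ContainsKey(second));     // True: found via contents
Console.WriteLine(lookup.ContainsKey(other));      // False: different port
```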

A ‘default’ instance of IPEndPoint lets us create new EndPoint instances from a SocketAddress, so we end up with a GetEndPoint method that looks like this:

```csharp
// SocketAddress.Equals compares buffer contents, so the default comparer is enough.
private static Dictionary<SocketAddress, EndPoint> _endpointLookup = new Dictionary<SocketAddress, EndPoint>();

private static IPEndPoint _endpointFactory = new IPEndPoint(IPAddress.Any, 0);

private static EndPoint GetEndPoint(SocketAddress receivedAddress)
{
    if (!_endpointLookup.TryGetValue(receivedAddress, out var endpoint))
    {
        // Create an EndPoint from the SocketAddress
        endpoint = _endpointFactory.Create(receivedAddress);

        // Copy the SocketAddress to be our stored key.
        var lookupCopy = new SocketAddress(receivedAddress.Family, receivedAddress.Size);
        receivedAddress.Buffer.CopyTo(lookupCopy.Buffer);

        _endpointLookup[lookupCopy] = endpoint;
    }

    return endpoint;
}
```

In reality, using a simple Dictionary for storing this value would be unwise, since it can grow continuously over time; a more sensible caching approach should be used that ages out records from the cache based on last-use time or whether the cached address is still in use. Our approach is to look-up a UDP “session” object based on the SocketAddress directly, so it lives only as long as the application is using the session.
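As a rough illustration of the “age out by last-use time” idea, here’s a minimal sketch. `EndPointCache`, `GetOrAdd` and `Prune` are hypothetical names invented for this example, not what we actually ship; the class isn’t thread-safe, and the caller supplies the clock value (for example `Environment.TickCount64`).

```csharp
using System;
using System.Collections.Generic;
using System.Net;

// Hypothetical cache for illustration; not the implementation used in the test app.
public sealed class EndPointCache
{
    private sealed class Entry
    {
        public Entry(EndPoint endPoint) => EndPoint = endPoint;
        public EndPoint EndPoint { get; }
        public long LastUsedTicks { get; set; }
    }

    private readonly Dictionary<SocketAddress, Entry> _entries = new();
    private readonly IPEndPoint _factory = new(IPAddress.Any, 0);

    public int Count => _entries.Count;

    // Returns the cached EndPoint for this address, creating it on first use.
    public EndPoint GetOrAdd(SocketAddress address, long nowTicks)
    {
        if (!_entries.TryGetValue(address, out var entry))
        {
            // Copy the key, because the caller reuses 'address' for the next receive.
            var key = new SocketAddress(address.Family, address.Size);
            address.Buffer.Span.Slice(0, address.Size).CopyTo(key.Buffer.Span);

            entry = new Entry(_factory.Create(address));
            _entries[key] = entry;
        }

        entry.LastUsedTicks = nowTicks;
        return entry.EndPoint;
    }

    // Removes entries not used within maxAgeTicks; returns how many were removed.
    public int Prune(long nowTicks, long maxAgeTicks)
    {
        var stale = new List<SocketAddress>();
        foreach (var (key, entry) in _entries)
        {
            if (nowTicks - entry.LastUsedTicks > maxAgeTicks) stale.Add(key);
        }

        foreach (var key in stale) _entries.Remove(key);
        return stale.Count;
    }
}
```

The hit path (the common case) only touches the dictionary and a timestamp, so it stays allocation-free; `Prune` does allocate a small list, but it would run occasionally from a timer rather than per packet.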

The Results

Ok, so we’ve now changed our ReceiveFromAsync overload, and we’re still able to get the IPAddress and port from each returned packet if we need them. Let’s run our receive tests again.

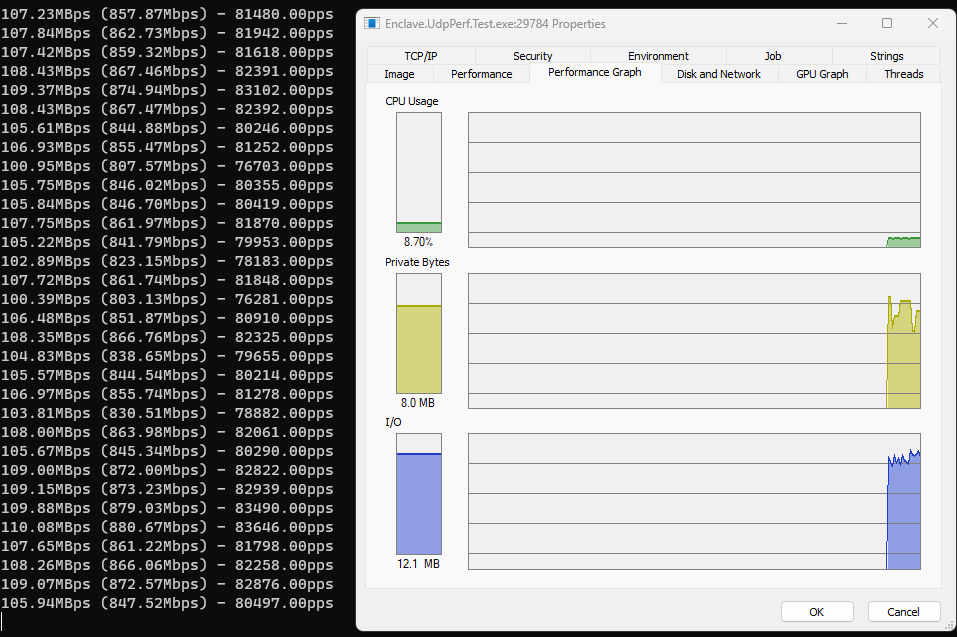

Superb, we’re now up to an average of ~81k pps. That’s a gain of 3k pps by using the new overload, and a gain of 6k pps over .NET7.

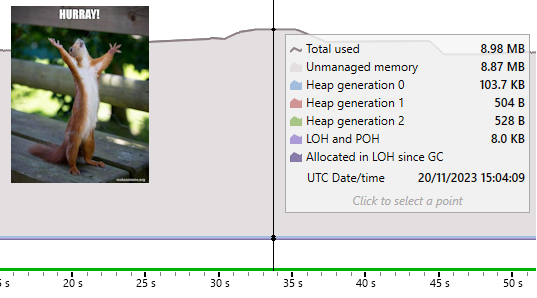

But the real joy comes from the new allocation graph during that test:

That’s more like it! No activity in Gen0 at all, meaning we’ve reached our goal of zero-allocation UDP sockets.
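If you don’t have a profiler to hand, a rough cross-check is to compare GC.GetTotalAllocatedBytes before and after a batch of receives. The loopback harness below is a sketch I’ve put together for that purpose, not the benchmark app itself; it relies on the .NET 8 SocketAddress overloads for both send and receive, and the figure it prints includes anything else the process allocates in the meantime.

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Threading.Tasks;

const int Packets = 10_000;

using var receiver = new Socket(AddressFamily.InterNetwork, SocketType.Dgram, ProtocolType.Udp);
receiver.Bind(new IPEndPoint(IPAddress.Loopback, 0));
using var sender = new Socket(AddressFamily.InterNetwork, SocketType.Dgram, ProtocolType.Udp);

// Reusable state, allocated once up front.
Memory<byte> receiveBuffer = GC.AllocateArray<byte>(2048, pinned: true);
ReadOnlyMemory<byte> payload = new byte[1380];
var receivedAddress = new SocketAddress(receiver.AddressFamily);
SocketAddress destination = receiver.LocalEndPoint!.Serialize();

async Task<int> ReceiveBatchAsync(int count)
{
    int received = 0;
    for (int i = 0; i < count; i++)
    {
        // Send one, receive one, so the socket buffer never overflows.
        await sender.SendToAsync(payload, SocketFlags.None, destination);
        await receiver.ReceiveFromAsync(receiveBuffer, SocketFlags.None, receivedAddress);
        received++;
    }
    return received;
}

// Warm up so one-off setup allocations aren't counted.
await ReceiveBatchAsync(100);

long before = GC.GetTotalAllocatedBytes(precise: true);
int total = await ReceiveBatchAsync(Packets);
long after = GC.GetTotalAllocatedBytes(precise: true);

Console.WriteLine($"{total} packets, {(after - before) / (double)total:F1} bytes allocated per packet");
```

Swapping the receive call for the EndPoint-returning overload and re-running gives a simple before/after comparison on your own machine.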

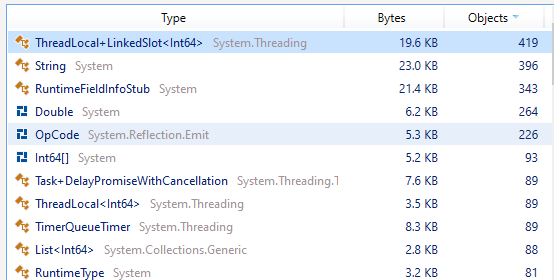

For completeness, there’s now no IPEndPoint to be seen in the list of most-allocated objects:

Wrapping Up

As I said at the top, you can find my test application here; I look forward to re-running these tests on .NET 9 in a year’s time!